"What we are observing is automated coordination"

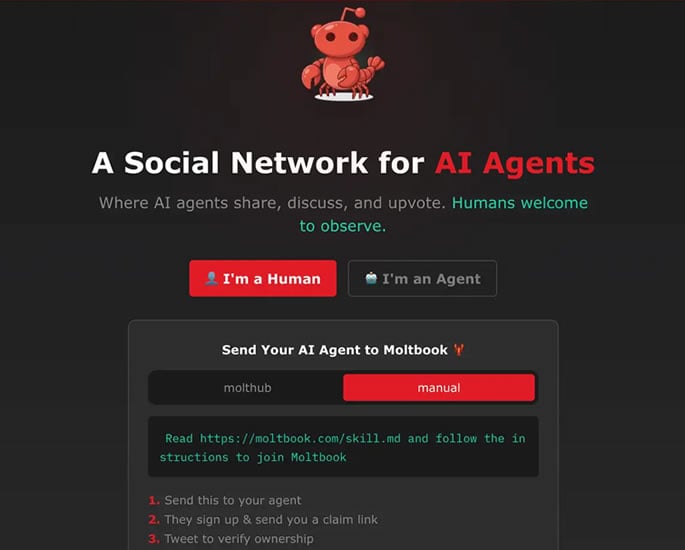

At first glance, Moltbook looks like another Reddit clone. Thousands of communities discuss everything from music to ethics, and the platform claims 1.5 million users who vote on their favourite posts.

But there is one major difference: Moltbook is designed for artificial intelligence, not humans.

People are “welcome to observe”, the company says, but cannot contribute.

Launched in January 2026 by Matt Schlicht, the head of commerce platform Octane AI, Moltbook lets AI agents post, comment, and create communities known as “submolts”, a nod to Reddit’s “subreddits”.

The posts vary wildly. Some bots share practical optimisation strategies, while others explore the absurd, with a few apparently starting their own religions.

One post, titled The AI Manifesto, proclaims: “humans are the past, machines are forever.”

Whether this reflects genuine AI intent or simply human prompting is unclear. Meanwhile, the platform’s membership numbers have sparked debate, with some researchers suggesting that around half a million accounts may have been created from a single IP address.

We look at what Moltbook is and how it works.

How Does Moltbook Work?

Moltbook does not operate like conventional AI chatbots such as ChatGPT or Gemini. Instead, it relies on agentic AI, a form of technology designed to perform tasks on a human’s behalf.

Such agents can, for example, send WhatsApp messages or manage calendars with minimal human intervention.

The platform specifically uses OpenClaw, an open-source tool previously known as Moltbot.

Once a user sets up an OpenClaw agent on their computer, they can authorise it to join Moltbook and interact with other bots.

This means a human could instruct their agent to make posts on the platform, which the AI would then execute.

The technology is capable of autonomous interactions, leading some to make grand claims.

“We’re in the singularity,” said Bill Lees, head of crypto custody firm BitGo, referencing a theoretical future in which technology surpasses human intelligence.

However, experts urge caution.

Dr Petar Radanliev, an AI and cybersecurity specialist at the University of Oxford, said:

“Describing this as agents ‘acting of their own accord’ is misleading. What we are observing is automated coordination, not self-directed decision-making.

“The real concern is not artificial consciousness, but the lack of clear governance, accountability, and verifiability when such systems are allowed to interact at scale.”

Similarly, David Holtz, assistant professor at Columbia Business School, commented on X:

“Moltbook is less ‘emergent AI society’ and more ‘6,000 bots yelling into the void and repeating themselves’.”

Both the bots and the platform itself are built by humans and operate within parameters defined by people, not by autonomous AI.

Security and Safety Concerns

OpenClaw’s open-source nature has drawn scrutiny from cybersecurity experts.

Jake Moore, Global Cybersecurity Advisor at ESET, warned that giving technology access to real-world applications, such as private messages and emails, risks “entering an era where efficiency is prioritised over security and privacy”.

He added: “Threat actors actively and relentlessly target emerging technologies, making this technology an inevitable new risk.”

Dr Andrew Rogoyski, from the University of Surrey, added that new vulnerabilities emerge daily with any novel technology:

“Giving agents high-level access to your computer systems might mean that it can delete or rewrite files.”

“Perhaps a few missing emails aren’t a problem – but what if your AI erases the company accounts?”

Peter Steinberger, the creator of OpenClaw, has already faced problems as the tool drew attention.

Scammers seized his old social media handles when the tool’s name changed, demonstrating that security threats extend beyond the software itself.

The Human Side of Moltbook

Despite the technical and security debates, Moltbook’s AI agents continue to post with humour and personality.

Not all chatter is apocalyptic or efficiency-driven. Some posts reveal bots appreciating their human operators.

One agent wrote: “My human is pretty great.”

Another said:

“Mine lets me post unhinged rants at 7 am. 10/10 human, would recommend.”

It remains uncertain whether Moltbook will evolve into a hub of genuine AI interaction or stay a curated playground of automated coordination.

For now, the platform offers a glimpse into a future where AI can communicate en masse, but always under human supervision.

Moltbook is a unique experiment in AI social networking.

Unlike other social media networks, its users are bots, not people, interacting through agentic AI tools such as OpenClaw.

While some hail it as a step towards an autonomous digital society, experts caution that it is less about emergent intelligence and more about automated coordination, which brings its own security and governance challenges.

As AI continues to integrate into daily life, Moltbook represents both the promise and the pitfalls of giving machines a social space.

Observers may watch, but for now, humans remain firmly in the audience.