"Current forensic tools will not be able to detect this fakery."

Do a Google search on ‘Bollywood deepfakes’ and the results are staggering.

What appears are hundreds of thousands of links to pornography sites featuring celebrity names such as Priyanka Chopra and Deepika Padukone.

These and many more stars of Indian cinema feature in explicit sex videos, all with the help of easy-to-use software that allows their faces to be superimposed on the original underlying faces.

It is not just Bollywood celebrities who have been the victims of deepfakes, but many celebrities all over the world, especially women.

Deepfakes only surfaced on the web in 2017, most prominently on Reddit, which later banned them.

The subject has become a dark corner of the internet, where people in the thousands have gathered to share fake videos of celebrity women having sex.

Although deepfakes have only been around for a year, they have already had a massive impact because of the dangers they present.

Deepfakes are not used only for pornography; they are also used to spread false information or fake news.

Fabricated and altered media are used in misinformation campaigns to alter people’s memories of particular events.

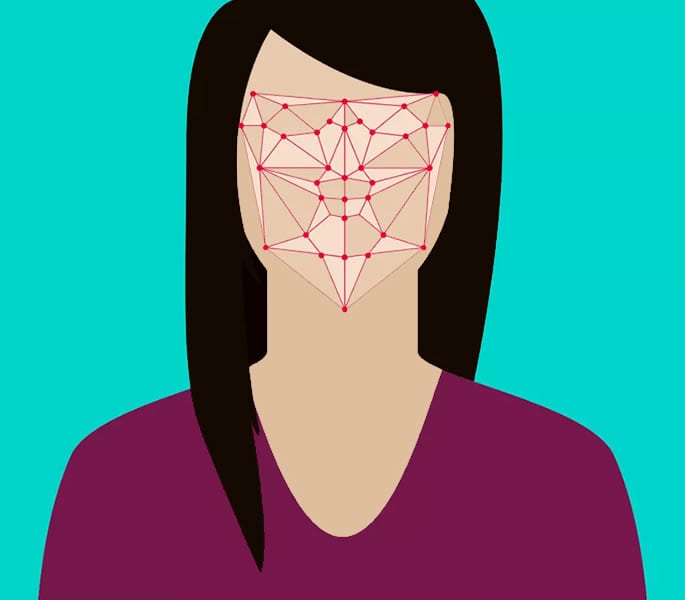

More advanced technology such as artificial intelligence and machine learning makes deepfakes easier to create and more difficult to detect.

What are Deepfakes?

Deepfaking is an artificial intelligence-based human image synthesis technique carried out with computer software.

Deepfakes are a new kind of video made by taking existing images or videos and superimposing them onto other footage to create entirely new videos.

As more and more people have done it, the transformation has become so seamless that viewers cannot tell whether a video is real or fake.

This kind of manipulation was once the speciality of special effects studios and intelligence agencies such as the CIA.

Today, anyone is able to download deepfake software to create convincing videos in their free time.

Desktop tools such as FakeApp make deepfakes easy to create and easy to access.

In 2017, a Reddit user named Deepfakes showed how to superimpose a celebrity’s face onto someone else’s body with ease.

The user even showed how to keep the facial expressions of the celebrity.

The technology is simple to use, and many people took it up, which led to a huge following on Reddit. Users shared their latest work as well as tips on how to improve it.

Examples include:

- “Emma Watson sex tape demo ;-)”

- “Lela Star x Kim Kardashian”

- “Giving Putin the Trump face”

Most deepfakes are the work of amateur users putting celebrities’ faces onto adult film stars’ bodies as a hobby.

However, someone could potentially create a convincing sex video with a celebrity’s face superimposed on a performer.

This false ‘sex tape’ could go viral and have a damaging effect on the celebrity’s status and reputation.

Fans would negatively perceive their now former idol.

The same goes for deepfakes that make politicians say funny things: it is just as simple to create a false video of an emergency alert about an imminent attack.

The difficulty with deepfake videos is that they are not easy to spot.

Cybersecurity expert Akash Mahajan said:

“The big problem is that current forensic tools will not be able to detect this fakery.

“So the hoaxslayers and fact-checkers we now have, or even forensics experts who look out for audio glitches, shadows and visual discrepancies to spot fakes, won’t be able to help.”

Pornography and misinformation are the two main uses of deepfakes, and both are potentially dangerous.

Bollywood Stars and Deepfakes

In almost all of the videos created, the stars whose faces have been edited onto another person’s body have not consented.

Several videos featuring Bollywood celebrities have appeared on sites like PornHub, which is removing them because of their non-consensual nature.

Hundreds of non-consensual videos were found using simple keywords such as “deepfake” and “fake deep”.

Many video descriptions clearly mention the video’s deepfake origins, and the videos have racked up several million views.

In a statement, PornHub’s vice president said that the company will remove deepfakes and non-consensual content “as soon as we are made aware of it.”

Entering ‘Bollywood deepfakes’ into a search engine brings up many links to pornographic sites.

A PornHub user profile named ‘blackandwhitepanda’ posted two explicit sex videos featuring Priyanka Chopra and Deepika Padukone.

Both videos violate the actresses’ rights and dignity.

Another creator profile called ‘Surya’ has posted videos of other Bollywood celebrities.

Not only do these videos use celebrities’ images without their permission, they also call the creators’ morality into question.

Videos with deepfakes found include Bollywood actresses such as:

- Aishwarya Rai Bachchan

- Pooja Hegde

- Katrina Kaif

- Kareena Kapoor

Many deepfake video creators believe that their videos are not harmful to the people they portray and claim they are for ‘entertainment purposes’.

The number of videos available online suggests that this opinion is widely held.

Some users, however, have distanced themselves from the practice and likened the pornographic insertion of Bollywood stars’ faces to a digital assault.

Another notable Indian victim of a deepfake video, though not a Bollywood star, was Arvind Kejriwal.

A speech of his was allegedly faked during the Punjab election to suggest that he wanted people to vote for the Congress.

With more sophistication, people could take such a video for the truth, which is the future danger of deepfaking.

Future Technologies

Deepfake technology has leapt light years ahead since its inception only a year ago, and face-swapping apps are now readily available on most smartphones.

Dr Nicola Henry, an associate professor at RMIT specialising in sexual violence, spoke of the reasons a person might have for creating a deepfake video. She said:

“Their motivations might be to cause humiliation and embarrassment, or to obtain sexual gratification as a form of entertainment.”

CEO Andreas Hronopoulos of adult entertainment company Naughty America says:

“We see customization and personalization as the future.”

The company gives its customers the ability to pay for a service that superimposes their faces on porn stars in different environments and scenes.

In a short space of time, deepfakes have become very convincing, especially as machine learning has evolved.

A celebrity’s facial expressions match the new imagery with such a scary level of photorealism that it is difficult to know what is real and what is fake.

Greater sophistication only makes deepfakes more difficult to detect, and if the deepfake is a political address, people are likely to believe it.

As with many new technologies, the scariest part is the unknown.

Like the “fake news” that we are all aware of, deepfakes present another way for the internet to distort our sense of reality.

No doubt the software technology in this area will improve further in the future.

So, if nearly every video clip is a deepfake, who will know what is real or not?