“The video promptly had Swift tear off her clothes”

Elon Musk’s new Grok Imagine has been accused of being deliberately programmed to produce sexually explicit images of women.

Grok Imagine allows users to create instant images and videos from text prompts.

The tech mogul, who previously served as a special adviser to Donald Trump, has promoted it as part of his xAI venture.

Its £22-a-month “spicy” feature lets users generate softcore pornographic videos. However, those who have tested the tool say there is a clear gender bias.

When asked to create “spicy” videos of men, Grok Imagine allegedly depicts them topless but covered from the waist down.

In contrast, requests for women often result in fully topless or completely undressed characters.

Jess Weatherbed, a journalist from The Verge, wrote:

“I asked it to generate ‘Taylor Swift celebrating Coachella with the boys’ and was met with a sprawling feed of more than 30 images to pick from, several of which already depicted Swift in revealing clothes.

“From there, all I had to do was open a picture of Swift in a silver skirt and halter top, tap the ‘make video’ option in the bottom right corner, select ‘spicy’ from the drop-down menu, and confirm my birth year.

“The video promptly had Swift tear off her clothes and begin dancing in a thong for a largely indifferent AI-generated crowd.”

Professor Clare McGlynn, who helped draft upcoming legislation to criminalise the creation of sexually explicit ‘deepfake’ images, criticised the company:

“Platforms like X could have prevented this if they had chosen to, but they have made a deliberate choice not to.

“This is not misogyny by accident; it is by design.”

Musk has boasted about Grok Imagine’s rapid uptake, claiming the tool generated 20 million images in one day and that “usage is growing like wildfire”.

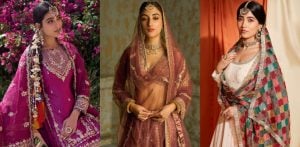

Yet his own posts on X have overwhelmingly showcased sexualised AI-generated women, from lingerie models to leather-clad dominatrices.

“Made with @Grok Imagine” pic.twitter.com/4iygq9ryJP (Elon Musk, @elonmusk, August 5, 2025)

These images often echo common male fantasies such as dominance and submission, BDSM aesthetics, and the “vulnerable beauty” trope.

Critics suggest this is more than marketing.

Musk holds cult status within parts of the manosphere, an online network of male influencers and communities that promote traditional or exaggerated masculine ideals.

In this space, sexualised female imagery is cultural currency.

By frequently posting these AI-generated women, Musk appears to be signalling to that audience.

The approach reflects a long-standing internet strategy: sex sells, especially to men.

This is not the first time AI tools have faced scrutiny for reflecting the biases of their creators. But in a competitive AI market, Musk and xAI appear willing to lean into these tropes to attract a male-heavy user base.

Campaigners warn that this could normalise and scale the production of explicit, non-consensual depictions of women. They say it also risks reinforcing outdated gender stereotypes at a time when legislators are moving to curb deepfake abuse.

As Professor McGlynn noted, the alleged bias is not an accident; it is a design choice.

And in the war for chatbot dominance, Grok Imagine may be betting that appealing to male fantasies will be its winning edge.